LegaSea 2.0

AI & Data

Semester programme: Master of Applied IT

Research group: Sustainable Data & AI Application

Project group members: Megan Spielberg, Sacha Ingemey

Project description

The primary design challenge of LegaSea is integrating various types of information (photos, text, expert knowledge, and user-submitted data) into a single, clear, and user-friendly system. The project must help both experts and citizen scientists identify fossils, improve photo quality, and understand AI decisions. At the same time, the system needs to work in real time, be transparent, and avoid “black box” behaviour. Combining all these needs into a single platform, while maintaining speed, reliability, and user-friendliness, is the biggest challenge.

Context

LegaSea is a research project coordinated by the Naturalis Biodiversity Center that aims to reconstruct Dutch Ice Age (Late Pleistocene) biomes and landscapes using an AI-assisted citizen science approach. The project focuses on the North Sea Basin, where hundreds of thousands of late Quaternary vertebrate fossils and artifacts can be found on sandy beaches. By identifying these remains, researchers hope to enhance understanding of vertebrate evolution, the history of hominin occupation, and the causes of megafaunal extinctions in northwestern Europe.

Results

The LegaSea project delivered two main outcomes: an explainable AI system for fossil identification and a real-time feedback prototype that improves fossil photography. Both products were validated in controlled studies and sit at technology readiness level (TRL) 4 to 5.

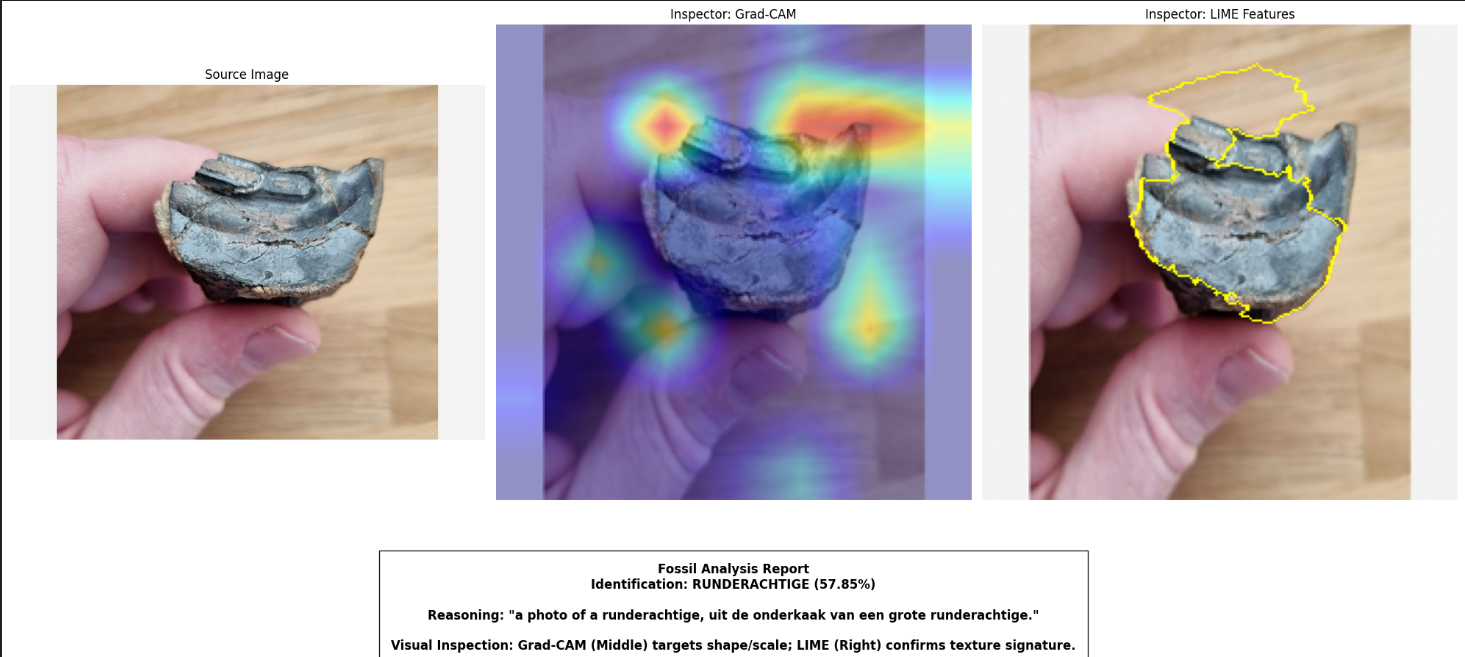

The explainable AI system combines three agents: one for identification, one for natural-language reasoning, and one for visual explanations. Together they produce a clear, human-readable chain of thought that helps experts and citizen scientists understand why the system makes a given prediction. Validation showed that the system can correctly identify common fossil types and can expose hidden model biases, such as a reliance on scale bars instead of actual fossil features. This finding matters because it shows that confidence scores alone cannot be trusted without transparency: a model can be confident for the wrong reasons. Latency tests confirmed that fast explanation methods such as Grad-CAM are suitable for field use, while heavier methods such as LIME are better suited to expert review. The system works reliably in a lab setting and demonstrates modularity and scalability, placing it at TRL 4–5.
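The three-agent structure described above can be sketched as follows. This is only an illustration under stated assumptions, not the project's actual implementation: the names `Identification`, `ExplainedResult`, `explain_fossil`, and `VISUAL_METHOD_BY_MODE` are hypothetical, the agents are passed in as plain callables, and the mapping from usage mode to explanation method simply mirrors the latency finding (fast method in the field, heavier method for expert review).

```python
from dataclasses import dataclass
from typing import Any, Callable, Dict


@dataclass
class Identification:
    label: str
    confidence: float


@dataclass
class ExplainedResult:
    identification: Identification
    reasoning: str       # natural-language explanation
    visual_method: str   # which visual explainer produced `visual`
    visual: Any          # e.g. a heatmap; the type depends on the explainer


# Assumed policy (hypothetical): fast explainer in the field,
# heavier explainer for expert review.
VISUAL_METHOD_BY_MODE = {"field": "grad-cam", "expert": "lime"}


def explain_fossil(
    image: Any,
    identify: Callable[[Any], Identification],
    reason: Callable[[Any, Identification], str],
    visualizers: Dict[str, Callable[[Any, Identification], Any]],
    mode: str = "field",
) -> ExplainedResult:
    """Run the identification, reasoning, and visual-explanation agents in turn."""
    if mode not in VISUAL_METHOD_BY_MODE:
        raise ValueError(f"unknown mode: {mode!r}")
    method = VISUAL_METHOD_BY_MODE[mode]
    identification = identify(image)
    return ExplainedResult(
        identification=identification,
        reasoning=reason(image, identification),
        visual_method=method,
        visual=visualizers[method](image, identification),
    )
```

Keeping each agent behind a simple callable is one way to get the modularity mentioned above: an explainer can be swapped or added by changing the `visualizers` dictionary without touching the identification or reasoning agents.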

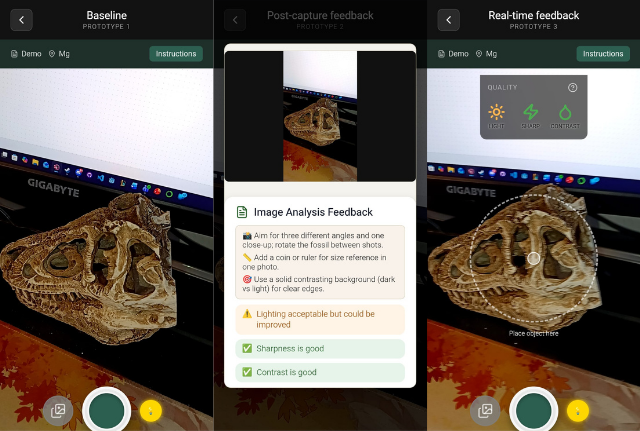

The second outcome is a set of mobile photography prototypes that test whether feedback can improve the quality of images taken by citizen scientists. Three versions were developed: no feedback, post-capture feedback, and real-time feedback during image capture. A user study with 20 participants and 183 images showed that real-time feedback significantly improved photo contrast and was rated the most usable of the three versions. Post-capture feedback had little effect, which indicates that quality issues must be corrected while the user is taking the photo, not afterwards. These findings also highlight a major insight: improving photo quality at the moment of capture reduces expert workload and increases the reliability of the machine-learning models that depend on these images. This prototype has been validated in a controlled environment, placing it at TRL 4.
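To make the idea of a real-time contrast check concrete, here is a minimal sketch. It assumes RMS contrast (the standard deviation of grayscale intensities scaled to the range 0 to 1) as the quality measure and a threshold of 0.15; the source does not state which contrast metric or threshold the prototype uses, so both are assumptions, as are the function names `rms_contrast` and `contrast_feedback`.

```python
from math import sqrt
from typing import Sequence


def rms_contrast(gray: Sequence[Sequence[float]]) -> float:
    """Return RMS contrast: the standard deviation of intensities in [0, 1]."""
    pixels = [p for row in gray for p in row]
    if not pixels:
        raise ValueError("image is empty")
    mean = sum(pixels) / len(pixels)
    return sqrt(sum((p - mean) ** 2 for p in pixels) / len(pixels))


def contrast_feedback(gray: Sequence[Sequence[float]], threshold: float = 0.15) -> str:
    """Return a short hint for the user; the threshold is an assumed value."""
    if rms_contrast(gray) < threshold:
        return "Low contrast: try a plainer background or better lighting."
    return "Contrast looks good."
```

In a real-time setting, a check like this would run on each camera preview frame so that the hint appears before the photo is taken, which matches the finding that feedback after capture comes too late.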

Together, these outcomes provide strong evidence that LegaSea can benefit from both improved data capture and transparent AI support. High-quality photos increase the scientific value of citizen submissions, while explainable AI ensures that automated identifications are trustworthy and interpretable. Both products demonstrate clear practical value and offer a solid foundation for future development and field integration.

About the project group

Sacha and I both completed our Bachelor's degrees in software engineering at Fontys Venlo. We worked on the LegaSea project for one semester, meeting weekly with stakeholders and working iteratively.